I started a journey of caring more for my privacy almost 2 years ago. Last year I wrote a series on what that journey was. If you haven’t read that series, here’s the final post on the series which has links to all the posts in the series.

As I concluded that series, I realized that caring for privacy is going to be a life long journey. As Big Tech spreads their tentacles wide and far and we increase our dependency on digital tools (including education for our children), it is important to continue to make privacy focussed decisions on a continuous basis.

So I thought I will publish a post every year summarizing some of the additional steps that I took, what worked and what didn’t.

Here’s the update for 2020.

De-Googling

This has been harder than I thought. Here’s where I stand.

GMail

I still have to use a bit of GMail even though I switched to ProtonMail as my primary email. This is primarily due to few banks and government websites that make it extremely hard to change email addresses on them without either filling a form or visiting them in person. So, this is still a work in progress and hopefully by end of 2021 I can get rid of GMail.

YouTube

Another difficult service to get rid off. Primarily because a whole lot of content that I consume is out there only. Tech review videos, shows (like US Late Night shows), movie trailers are still pretty much YouTube only. So I still do spend time on YouTube but with few tweaks on my behavior:

- I have reduced usage on my mobile drastically and have restricted all the permissions that the app asked for. I am also not “logged in” – though I am pretty sure Google’s algorithm still knows about me with other data they can collect 🙂 I just get some pleasure thinking that I make it difficult for them 🙂

- On the desktop, again, I am not logged into Google by default. I also implement a strategy using “Containers” – which I will talk about later in this post

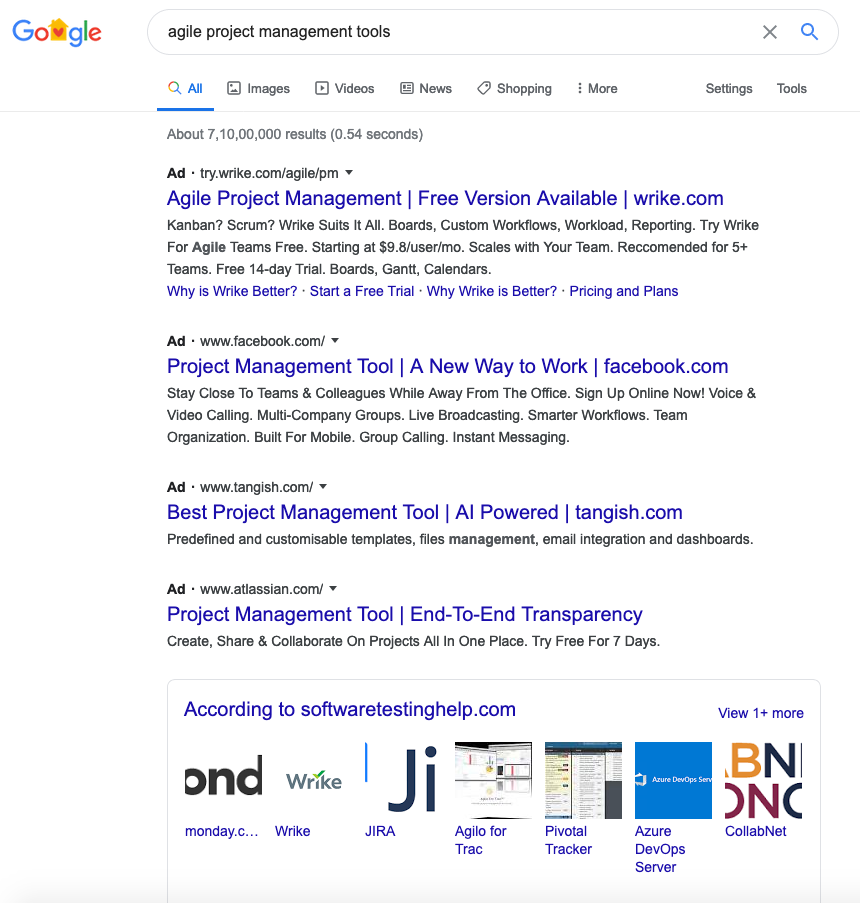

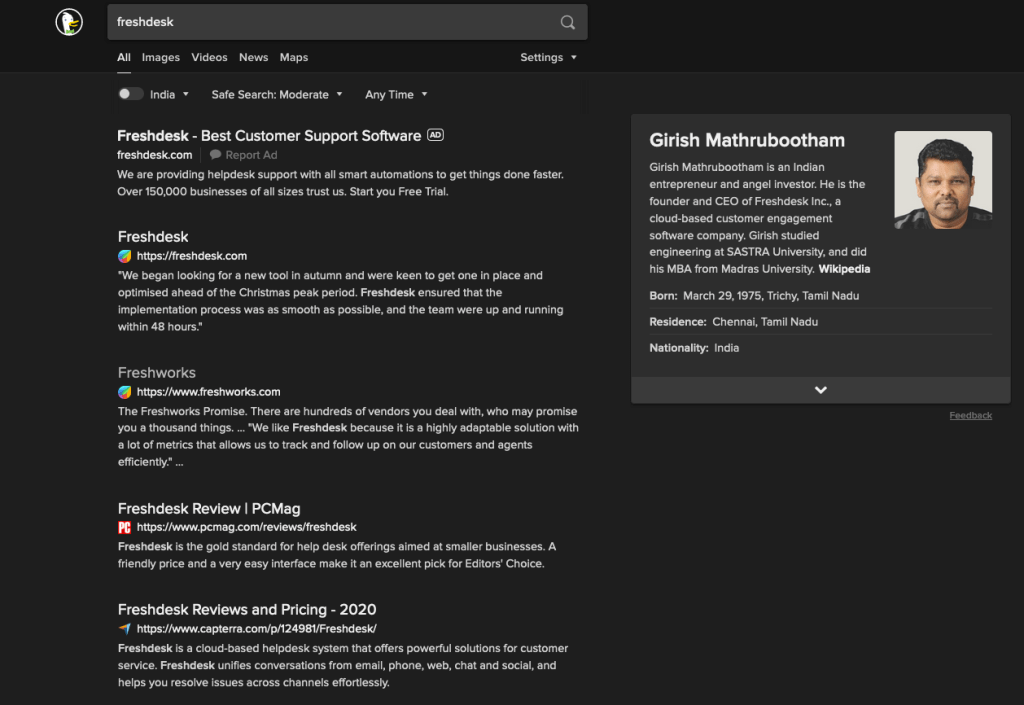

Google Search

I have eliminated this to about 95%. I have been using DuckDuckGo as my primary search engine for more than 18 months now and I have been fine. Except when it comes to some local search – such as looking for local shops. Google still does an amazing job with local search and I am hoping DDG gets better over time.

Here’s one surprising thing though: I still see people in the tech world showing some surprise when they see me using DDG (like “Oh! you use DDG”). Sometimes they also make fun when DDG shows some irrelevant search result while Google picked it up better.

For all those folks: DDG is fine 90-95% of the time. You will be able to find what you are looking for most of the time. It’s just your habit that needs a bit of change and like I mentioned above, you may still not be able to leave Google Search completely. It’s perfectly OK to use multiple Search Engines.

Google Maps

Oh boy! This is the hardest one. They just nailed it here and I still use it as my primary navigation app. Sometimes I also use it as a Search Engine to find address/phone numbers of businesses (when DDG doesn’t give good results).

I am on iOS and haven’t given Apple Maps enough time and attention. I am going to take that up as an action item for 2021 and see how it goes.

If you have been using Apple Maps in India, please let me know in the comments about your experience.

Google Pay / GPay

Nope. I don’t use it at all. Almost everyone in my close network of friends and families use it and they all have told me how easy and simple the experience is. To all those people – I just view GPay as Google’s way of knowing you better in the offline world. So that they can serve better Ads in your online world.

It was pretty hard to live without GPay during the pandemic days where almost every business or even individual told me this: “Can you GPay?”. When I say I don’t use the App, I am being seen as old as dinosaurs. I have resisted so far and I hope I can continue to live without it.

Other Google Products

Apart from these, I don’t use any other Google product.

No to Google Photos, Hangouts (is it Meet?, Duo?, whatever), Calendar, Google App.

None of their other apps are installed on my phone.

Tools

Here are some more tools that I use on a daily basis.

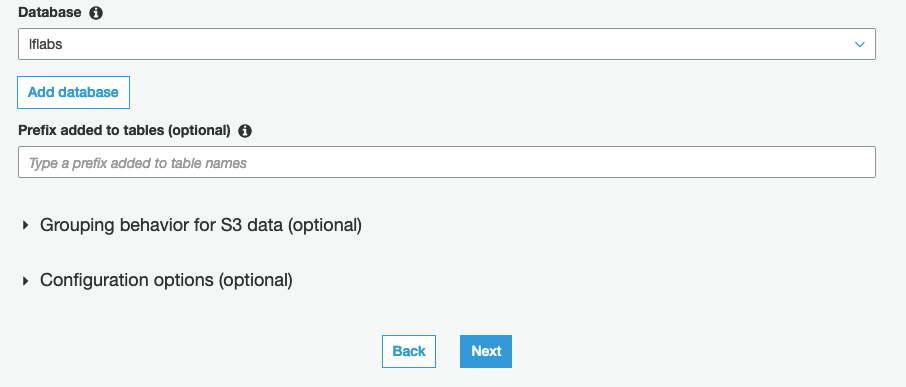

Disposed containers in Firefox

I use Firefox Multi-account Containers heavily. I have created a “Dispose” container and have set “YouTube” to be opened only on this container. I also delete and recreate this container once in couple of weeks.

The reason I do this to make sure YouTube’s algorithm doesn’t get me in to a rabbit hole of watching more and more videos through their recommendations and continue to serve more and more Ads.

I don’t know if it really helps in reducing how much YouTube tracks (given I am also not logged into Google). I will always believe Google’s algorithms are far more sophisticated. But at least this method of using Disposable containers reduces the time that I spend on YouTube.

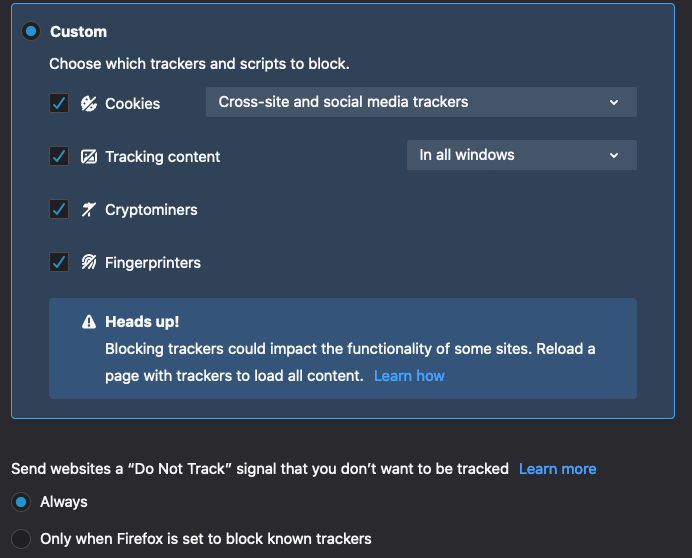

uBlock Origin

I use this Firefox extension to primarily block Ads and trackers. The default list that they provide is good enough. I am yet to experiment with some additional lists that the community has built.

Behaviour Related

I also took some actions to modify some of my behaviors to reduce my digital footprint overall (which can indirectly provide better privacy – if you don’t provide enough data, you don’t have to worry about it :))

- Uninstalled vast majority of apps from the phone. No shopping apps (Amazon, Flipkart, etc..). No social apps (Twitter, LinkedIn, etc…). I do have Twitter installed on my laptop but I set a specific time of the day to look at Twitter for few minutes

- Turned off location access to all other apps. In iOS you can choose “Allow Once” whenever location is required when using an app (such as Google Maps)

- Turned off Notifications for almost all the apps. Especially Whatsapp. I know what you are going to ask next – What if there was some urgent message? They will call 🙂

The Big Privacy Related News of 2020

I know! You don’t want to hear or discuss about this topic anymore in your life. I leave it to your own best judgement to decide for yourself. But I thought I will summarize some of the objections or feedback that I received on switching from Whatsapp to an alternative.

- The top feedback: I don’t want to install another app (from people who have 200 apps on their phone :))

- What’s the guarantee that the alternative doesn’t do what Whatsapp is doing today? (sure, that’s why you got to pick a sane one and be ready to switch out of that in future. If they have a paid model, you should pay them to make sure they aren’t pressurized to monetize your data)

- How does this matter in a country like India where all insurance providers call me (with my fully policy details) exactly few days before my insurance renewal? (I am with you buddy. Let’s chat on Whatsapp :))

- I saw the prompt by Whatsapp for 5 seconds and accepted it already (!!!)

Jokes aside, here are my personal takeaways:

- Whatsapp isn’t going anywhere. But at least this created a bit of more awareness. I am happy that some of the groups that I am in took this opportunity to switch

- It is important for us to realize that it’s perfectly OK to use multiple apps. Especially when we are having private conversations. If we are beginning to get uncomfortable with an app, we should swith to another. And that’s OK

- So many people that I know (who work in tech) are OK with what Whatsapp wanted to do. They didn’t understand that the change was for user-to-business communication and thought it was for all communication that happens in Whatsapp. And they are still OK with it too (even after seeing that comparison screenshot of data collected by different messaging apps). If something like this doesn’t shake them, I wonder what will

Apple’s App Privacy Labels

This is what probably led to the huge Whatsapp controversy. Apple now requires every App developer to report what data is being collected by the App and how its being used. If you visit the App’s listing page on the AppStore, you will see info about what data the app is collecting.

Here’s what Facebook has reported (I am assuming they just did a “Select All” :))

It looks like it’s upto the developer to report what data they are collecting (I am hoping Apple is able to audit as part of their app review process). But it does bring in better transparency and users can make an informed decision while installing an app.

Btw, Google hasn’t yet provided privacy details as of publishing this post (end of January 2021). Here’s the screenshot of the Google app (and pretty much the same case for all of their iOS apps)

Well that’s the update for 2020. And hope 2021 brings in more privacy (and more time out of our homes) in our lives.

Thanks for reading. Let me know your thoughts in the comments section.